Artificial intelligence has come a long way in a short time. While the underlying technology has been around for decades, we’ve seen breakthrough after breakthrough in the level of intelligence demonstrated by AI in the past few years.

At the forefront of the AI evolution is OpenAI, with models like GPT-4 and tools like ChatGPT, but now it wants to give those models legs in the form of robots from start-up Figure.

Sam Altman’s AI lab has invested millions in Figure, an AI robotics company building general-purpose humanoid robots. Figure is now valued at $2.6 billion and has investment from Microsoft, OpenAI, Nvidia, Intel, and Jeff Bezos (not Amazon, just Bezos).

What does this mean for AI and robotics?

For some time the fields of AI and robotics have been joined by an invisible thread. It’s clear they are meant to be one field, but they’ve developed independently of one another.

In OpenAI’s early days the company attempted to create a robotics division but found that hardware and software were still two distinct entities: AI wasn’t ready to give mind to machine. That changed in the past year with the rapid improvements in multimodality.

“We’ve always planned to come back to robotics and we see a path with Figure to explore what humanoid robots can achieve when powered by highly capable multimodal models,” said Peter Welinder, VP of Product and Partnerships at OpenAI.

Welinder added: “We’re blown away by Figure’s progress to date and we look forward to working together to open up new possibilities for how robots can help in everyday life.”

Multimodality basically means AI models can understand and interact with more than just text. Gemini from Google and GPT-4V are multimodal models in that they can take input as text, code, images, video, or speech and interpret them equally.

This is vital if you want a robot to do its own thing without humans having to script every single task before the robot performs it.

What does this mean for Figure?

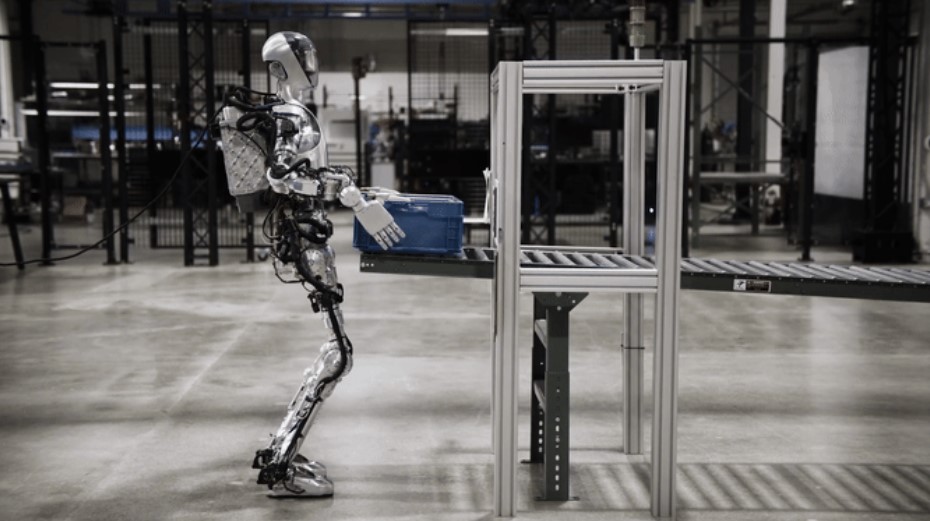

Figure AI was founded in 2022 with the goal of having robots able to work in manufacturing, shipping, logistics, warehousing, and even retail where “labor shortages are the most severe”.

While there are bots available for specific tasks within each of those industries, Figure is going down the humanoid route, creating machines that can adapt as needed to different situations.

With technology at this level it is about two things: money and compute. The funding round takes the company’s valuation above $2 billion, and the partnership with OpenAI means models that have already been trained can be adapted and used to provide mind for machine.

The goal is for Figure 01, the company’s first android, to be able to perform a range of “every day tasks autonomously” and that is where the AI mind comes into play.

We’ve already seen experiments from university researchers, like Alter3, using OpenAI’s GPT-4 to help a robot learn poses and moves from a simple text prompt. The next step is full autonomy.

Why is AI in robotics a big deal?

Being able to have a robot work out what to do for itself straight from the factory belt — or at least after minimal training — is a game changer for industry.

Google has research divisions working on integrating AI into machines to allow robots to learn everyday tasks and perform functions they have never seen before by analyzing a scene.

Much of this comes down to advances in computer vision technology, particularly AI vision where the underlying model can take a real-world view from a camera, examine the situation, and make a judgement call on what is required.

“Our vision at Figure is to bring humanoid robots into commercial operations as soon as possible,” said Brett Adcock, Founder and CEO of Figure. He said the new investment ensures the company is “well-prepared to bring embodied AI into the world to make a transformative impact on humanity.”

Embodied AI is a term I think we’ll see a lot more. Basically it is ChatGPT with legs, or Google Gemini but with the ability to walk around in the real world and have a conversation.

If you weren’t worried about AI before, imagine what it will be like when ChatGPT can walk up to you for a chat and ask why you never said thank you for those pictures of dogs it made.